In the realm of Stable Diffusion, the parameters of batch size and batch count are crucial for controlling the generation of images.

The batch size determines how many images are generated in parallel, and therefore how much VRAM is required, while the batch count determines how many batches are run one after another.

Increasing the batch size can shorten the total time needed to generate a set of images, but it requires more VRAM.

Raising the batch count, on the other hand, yields more images without extra VRAM, but it comes at the cost of increased generation time.

In the ever-evolving field of image generation, achieving optimal system performance is crucial for delivering high-quality solutions.

A key aspect that influences system performance is striking the right balance between Stable Diffusion batch count and batch size.

This article aims to provide an in-depth analysis of these two parameters, exploring their relationship and impact on overall system efficiency, reliability, and scalability.

First, we will define Stable Diffusion batch count and batch size, highlighting their respective roles in system design and implementation.

Then, we will delve into the trade-offs and challenges associated with adjusting these parameters, emphasizing the significance of finding the optimal equilibrium to achieve the desired system performance.

Understanding Stable Diffusion Batch Size and Its Purpose:

Unveiling the Enchantment of Batch Size:

Imagine batch size as the cool parameter that determines how many samples are processed at once: images generated in parallel when you create pictures, or examples per step when a model is trained or fine-tuned. It’s like a power booster for efficiency and effectiveness!

So, when it comes to Stable Diffusion, batch size plays a pivotal role in generating those awe-inspiring images. A larger batch size translates to faster processing as it can handle more samples concurrently.

However, there’s a catch—it requires more memory and computational resources. Think of it as having a hearty appetite that needs satisfying.

But fear not! If you’re working with limited resources, a smaller batch size can come to the rescue. It reduces memory and computational demands, making it suitable for systems with constraints.

However, there’s a trade-off. In image generation, smaller batches simply mean fewer images per pass, so a large set takes longer to finish. In training, smaller batches give noisier gradient estimates, which can slow convergence and potentially reduce accuracy. It’s like savoring smaller bites of data, but it might introduce some noise along the way.

When training, the key lies in finding the right balance between batch size, learning rate, and the number of iterations. It’s like orchestrating a symphony to optimize the performance of Stable Diffusion.

Now, when it comes to choosing that initial batch size for training, we’ve got you covered with some industry tips. A common rule of thumb is to start with a batch size that’s a power of two, within the range of 16 to 512. And guess what? The crowd favorite is usually the reliable 32. It’s like the Goldilocks of batch sizes: not too big and not too small, just right! For image generation on a consumer GPU, the numbers are much smaller, typically in the single digits.

And hey, have you heard about dynamic batching? It’s a clever technique that pools requests together for parallel processing. It’s like a superhero team-up that boosts throughput and expedites tasks.
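To make the idea of dynamic batching a little more concrete, here is a minimal sketch that pools pending requests into batches of a fixed maximum size. The function name `group_into_batches` and the sample prompts are hypothetical, and a real dynamic batcher would usually also wait a short time for new requests to arrive before closing a batch, which this sketch leaves out.

```python
from typing import List


def group_into_batches(requests: List[str], max_batch_size: int) -> List[List[str]]:
    """Group pending requests into batches of at most max_batch_size items.

    Hypothetical helper for illustration only; it is not part of any
    Stable Diffusion tool.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    return [
        requests[start:start + max_batch_size]
        for start in range(0, len(requests), max_batch_size)
    ]


# Five pending prompts, processed at most two at a time.
pending = ["a cat", "a dog", "a castle", "a forest", "a robot"]
batches = group_into_batches(pending, max_batch_size=2)
print(batches)
```

Each inner list would then be handed to the model as one parallel pass, so five requests need three passes instead of five.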

In a nutshell, batch size determines how many images Stable Diffusion processes at once. It influences processing time and memory usage and, when training, model quality.

Bigger batch sizes are like speed demons, but they might devour your memory. For training, starting with a batch size of 32 is a safe bet, and dynamic batching can be your secret weapon for accelerated processing.

So, go ahead, experiment, and discover that sweet spot in batch size. Let Stable Diffusion unleash its magic, creating stunning results while ensuring your system runs seamlessly.

How to increase batch size properly?

When discussing the expansion of batch size in Stable Diffusion, it is crucial to acknowledge the potential challenges that may arise.

These challenges include hitting memory limits, a perceived decline in performance, more time needed to reach the desired state, and potential drifting issues.

However, there’s no need to fret! By diligently monitoring the system’s performance and gradually adjusting the batch size in a controlled manner, we can effectively navigate through these challenges.

To achieve the desired system performance while increasing batch size in Stable Diffusion, you can follow these tips:

- Use dynamic batching to enhance throughput by processing requests in parallel.

- Gradually increase the batch size while monitoring system performance to avoid memory limitations and excessive performance degradation.

- When training, use gradient accumulation to get the effect of a larger batch with reduced memory usage.

- Keep the batch size in reasonable proportion to the dataset size to prevent drifting issues.
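The gradient accumulation tip applies to training rather than to image generation, and a small numeric sketch may help show why it saves memory without changing the result. The toy model `y = w * x`, the sample data, and both function names are assumptions made up for this illustration; real training code would rely on a deep-learning framework instead.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def mse_gradient(w: float, samples: List[Sample]) -> float:
    """Gradient of the mean squared error for the toy model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def accumulated_gradient(w: float, samples: List[Sample], micro_batch_size: int) -> float:
    """Build the full-batch gradient from small micro-batches, one at a time."""
    total = 0.0
    for start in range(0, len(samples), micro_batch_size):
        micro = samples[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the full batch.
        total += mse_gradient(w, micro) * len(micro) / len(samples)
    return total


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
full = mse_gradient(0.5, data)
accumulated = accumulated_gradient(0.5, data, micro_batch_size=2)
print(abs(full - accumulated) < 1e-9)
```

Only one micro-batch has to sit in memory at any moment, yet the accumulated gradient matches the one computed from the whole batch.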

What is Stable Diffusion batch count?

The batch count refers to the number of batches executed, while the batch size represents the quantity of images generated in each batch.

Generating a large number of images simultaneously can result in increased VRAM usage, which may lead to errors, particularly in environments with limited VRAM.

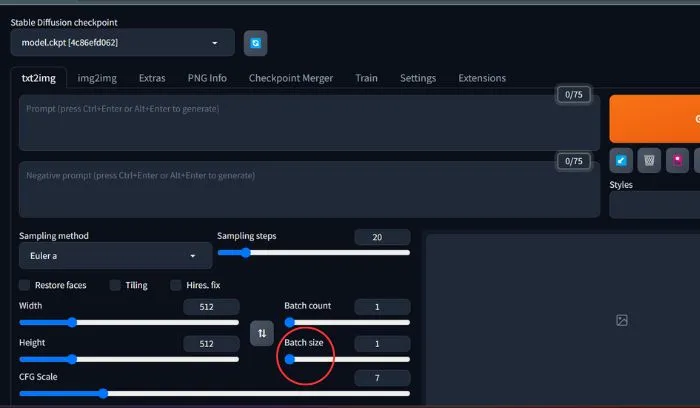

To generate images without errors in such circumstances, it is advisable to keep the batch size at 1 and set the batch count to the number of images you want.

In the Stable Diffusion WebUI, you can adjust the batch count by utilizing the “Batch count” input control. However, please note that the default maximum batch count may be restricted to 100. Some users opt to use a batch size of 1 and increase the batch count to generate multiple higher-resolution images.
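If you drive the WebUI from a script instead of the browser, the same two settings show up as request fields. The sketch below assumes the AUTOMATIC1111 WebUI was started with its API enabled and that its `/sdapi/v1/txt2img` endpoint accepts `batch_size` and `n_iter` (the field that corresponds to batch count); check your own version’s API docs before relying on this. The helper name `build_txt2img_payload` and the prompt are hypothetical, and the code only builds the request body without sending it.

```python
import json


def build_txt2img_payload(prompt: str, batch_size: int = 1, batch_count: int = 1) -> dict:
    """Build a request body for an assumed /sdapi/v1/txt2img endpoint.

    Hypothetical helper: the field names follow the AUTOMATIC1111 WebUI API
    as we understand it, where "n_iter" is the batch count.
    """
    if batch_size < 1 or batch_count < 1:
        raise ValueError("batch_size and batch_count must be at least 1")
    return {
        "prompt": prompt,
        "batch_size": batch_size,  # images generated in parallel
        "n_iter": batch_count,     # batches run one after another
    }


# Low-VRAM friendly: one image at a time, four times in a row.
payload = build_txt2img_payload("a lighthouse at dusk", batch_size=1, batch_count=4)
print(json.dumps(payload, indent=2))
```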

Now that we have covered all the key aspects of batch count and size, let’s explore their differences.

Batch Count vs. Batch Size: Which Parameter is More Important?

Let’s look at how batch count and batch size differ, and see how each affects the image generation process.

Firstly, batch count is the number of batches run one after another. Essentially, it tells Stable Diffusion how many times to repeat the generation pass.

On the other hand, batch size determines the quantity of images generated in each individual batch. It signifies the number of images produced simultaneously.

To better grasp this concept, let’s consider an example. Imagine setting the batch count to 10 and the batch size to 4. In this scenario, Stable Diffusion would generate a total of 40 images.

However, it would generate them in groups of 4 at a time. This means that during each iteration, 4 images would be generated, resulting in a grand total of 10 iterations.
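The arithmetic in this example is simple enough to check in a few lines. The function names below are made up for illustration and are not part of any Stable Diffusion tool.

```python
from typing import List


def total_images(batch_count: int, batch_size: int) -> int:
    """Total images produced: batches run in sequence times images per batch."""
    return batch_count * batch_size


def batch_schedule(batch_count: int, batch_size: int) -> List[int]:
    """Number of images produced by each sequential batch."""
    return [batch_size] * batch_count


print(total_images(batch_count=10, batch_size=4))         # 40
print(len(batch_schedule(batch_count=10, batch_size=4)))  # 10
```

Swapping the two values (batch count 4, batch size 10) would also give 40 images, but each pass would then need enough VRAM for 10 images at once.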

It’s worth noting that selecting a higher batch size will demand more VRAM (Video Random Access Memory). On the other hand, a higher batch count may not necessarily require additional VRAM, as the process is executed in batches.

Moreover, choosing an optimal batch size matters because it can maximize GPU utilization and make generation (or training) more efficient.

By fine-tuning the batch size, you can strike a balance that ensures efficient utilization of computing resources while achieving desired results.

Understanding the differences and optimizing their values can have a substantial impact on the efficiency and outcomes of the image generation process.

How Do You Choose?

When it comes to deciding between batch count and batch size in Stable Diffusion, it depends on your specific needs and resources. Let’s explore the factors:

- If you have limited VRAM (Video Random Access Memory), it’s important to take that into account. In such cases, opting for a smaller batch size would be a wise choice.

- By using a smaller batch size, you can avoid memory errors that may occur when trying to process larger batches of data. It’s like dividing your workload into smaller, more manageable chunks to fit within the available memory.

- On the other hand, if your main goal is to generate a greater number of images overall, then focusing on the batch count becomes more relevant. Increasing the batch count lets you produce more images in total.

- While a higher batch count doesn’t necessarily demand more VRAM, it does involve running the process in batches. In contrast, a higher batch size would require more VRAM since it’s directly related to the amount of memory needed for each individual batch.

So, when making your decision, it’s essential to weigh these factors carefully. Consider your VRAM limitations and the trade-off between batch size and batch count.

If you have limited VRAM, prioritize a smaller batch size to avoid memory errors.

On the other hand, if generating a larger number of images is your priority, a higher batch count may be more suitable.

By finding the optimal balance between batch size and batch count, you can make the most of your GPU’s capacity and generate images more efficiently.
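One way to turn this advice into numbers is to start from the number of images you want and the largest batch size your GPU can handle, then derive both settings. This is only a sketch: `plan_batches` is a hypothetical helper, and the largest safe batch size is something you would have to find out for your own card.

```python
import math
from typing import Tuple


def plan_batches(desired_images: int, max_batch_size: int) -> Tuple[int, int]:
    """Return (batch_size, batch_count) that covers desired_images.

    The batch size never exceeds max_batch_size; the batch count is rounded
    up, so the plan may produce a few more images than requested.
    """
    if desired_images < 1 or max_batch_size < 1:
        raise ValueError("both arguments must be at least 1")
    batch_size = min(desired_images, max_batch_size)
    batch_count = math.ceil(desired_images / batch_size)
    return batch_size, batch_count


print(plan_batches(desired_images=40, max_batch_size=4))  # (4, 10)
print(plan_batches(desired_images=10, max_batch_size=1))  # (1, 10)
```

With plenty of VRAM the plan leans on batch size, and with very little it falls back to a batch size of 1 and a higher batch count.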

FAQs

How does increasing batch size affect GPU memory?

When you increase the batch size in Stable Diffusion, every image in the batch has to fit in GPU memory at the same time, so VRAM usage grows with the batch size and can end in out-of-memory errors.

So, it’s crucial to keep an eye on GPU memory usage and gradually increase the batch size to avoid these issues and ensure smooth operation.
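The “increase gradually and watch for errors” approach can be sketched as a small search loop. Everything here is a stand-in: `fake_generate` pretends each image needs 1.5 GB on an 8 GB card, and it raises Python’s built-in `MemoryError`, whereas a real GPU library would raise its own out-of-memory exception that you would catch instead.

```python
from typing import Callable


def find_largest_batch_size(try_batch: Callable[[int], None], start: int = 1, limit: int = 8) -> int:
    """Double the batch size until try_batch fails or the limit is passed.

    Returns the largest batch size that succeeded, or 0 if none did.
    """
    largest_ok = 0
    size = start
    while size <= limit:
        try:
            try_batch(size)
        except MemoryError:
            break
        largest_ok = size
        size *= 2
    return largest_ok


def fake_generate(batch_size: int) -> None:
    """Hypothetical stand-in for a generation call (assumed 1.5 GB per image, 8 GB card)."""
    if batch_size * 1.5 > 8.0:
        raise MemoryError("simulated out-of-memory")


best = find_largest_batch_size(fake_generate, start=1, limit=16)
print(best)  # 4
```

In this simulation sizes 1, 2, and 4 fit, while 8 would need 12 GB, so the search settles on 4.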

Why is batch size usually a power of 2?

It doesn’t have to be; this is a convention rather than a requirement. For best performance, your mini-batch should fit entirely within your CPU/GPU memory, and since memory and many hardware units are organized in powers of two, it’s commonly recommended to use mini-batch sizes that are powers of two as well.

Can the batch size be 1?

Yes. The batch size should be at least one and, in training, no more than the total number of samples in the training dataset. For image generation, a batch size of 1 is the usual choice on GPUs with limited VRAM. As for the number of epochs in training, it can be any whole number from one upward.

Why do we use a batch size of 32?

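A batch size of 32 is a popular starting point when training neural networks, not a Stable Diffusion generation default; in the Stable Diffusion WebUI, the batch size starts at 1. The value 32 is popular because it tends to fit comfortably in GPU memory while still using the hardware well. Other commonly used training batch sizes include 16, 64, 128, 256, 512, and 1024.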